AI Platform Engineering Reference Architecture

In this post, we’ll explore a comprehensive reference architecture for an AI platform. This architecture is designed to support the entire lifecycle of AI applications, from development through deployment, ensuring scalability, efficiency, and robust performance. It builds upon the principles of platform engineering while addressing the unique challenges and opportunities presented by AI workloads.

Core Components of the Architecture

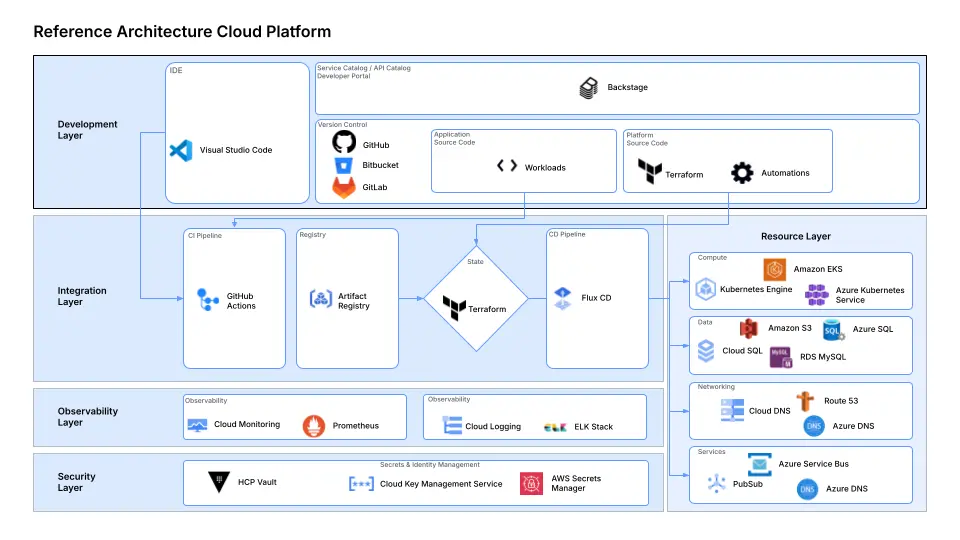

The architecture is structured into five key layers, each critical for different stages of AI development and deployment:

Development Layer:

- Service Catalogue: Centralised repositories for AI applications, shared components, prompt templates, and utility scripts. This promotes reuse and accelerates development by providing readily available resources.

- Version Control: Centrally manage code, model configurations, datasets, and AI prompts. Implement branching strategies that enable collaboration and ensure reproducibility.

- Workloads: Define clear specifications for different AI workloads, such as data preprocessing, model training, hyperparameter tuning, and inference tasks. Use containerisation to encapsulate environments.
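A workload specification can be as simple as a typed record that is validated before a job is submitted. The following is a minimal sketch under stated assumptions: `WorkloadSpec`, `WorkloadKind`, the field names, and the validation rules are hypothetical and are not tied to any particular scheduler or platform.

```python
from dataclasses import dataclass, field
from enum import Enum


class WorkloadKind(Enum):
    PREPROCESSING = "preprocessing"
    TRAINING = "training"
    TUNING = "tuning"
    INFERENCE = "inference"


@dataclass(frozen=True)
class WorkloadSpec:
    """Hypothetical specification for one containerised AI workload."""
    name: str
    kind: WorkloadKind
    image: str          # container image, expected to carry an explicit tag
    cpu_cores: float
    memory_gib: float
    gpus: int = 0
    env: dict = field(default_factory=dict)

    def validate(self) -> list:
        """Return a list of problems; an empty list means the spec is usable."""
        problems = []
        # Split on the last colon; a "/" after it means the colon was a registry port.
        _, sep, tag = self.image.rpartition(":")
        if not sep or "/" in tag or tag == "latest":
            problems.append("image must be pinned to an explicit tag")
        if self.cpu_cores <= 0 or self.memory_gib <= 0:
            problems.append("cpu_cores and memory_gib must be positive")
        if self.gpus < 0:
            problems.append("gpus cannot be negative")
        return problems
```

Rejecting untagged or `latest` images is one way to keep a containerised workload reproducible; teams with stricter needs might pin by image digest instead.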

Integration Layer:

- Infrastructure as Code (IaC): Use tools like Terraform or AWS CloudFormation to provision and manage infrastructure components such as compute instances, storage, and networking. This ensures consistency across environments and enables scalable resource management.

- Continuous Integration (CI): Set up CI pipelines to automate code testing, static analysis, and integration of new features. Incorporate unit tests and integration tests for model code.

- Model Registry: Implement a model registry (e.g., MLflow Model Registry) to track different versions of models, metadata, and performance metrics. This facilitates model governance and simplifies the promotion of models to production.

- Continuous Deployment (CD) Pipeline: Automate the deployment of models and services using CD pipelines. Use blue-green or canary deployment strategies to minimize risks during updates.
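To illustrate the canary strategy mentioned above, the sketch below routes a fixed percentage of requests to a new model version by hashing the request ID. It is an assumption-laden example, not a description of any specific CD tool: `canary_bucket`, `choose_version`, and the version labels `v1` and `v2` are hypothetical names.

```python
import hashlib


def canary_bucket(request_id: str) -> int:
    """Map a request ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_version(request_id: str, canary_percent: int,
                   stable: str = "v1", canary: str = "v2") -> str:
    """Send roughly canary_percent percent of requests to the canary version."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # The same ID always lands in the same bucket, so routing is sticky.
    return canary if canary_bucket(request_id) < canary_percent else stable
```

Because the bucket depends only on the request ID, a caller that reaches the canary at 10% still reaches it when the rollout widens to 50%, which keeps behaviour consistent for individual users during a gradual rollout.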

Observability Layer:

- Monitoring Tools: Integrate monitoring solutions like Prometheus and Grafana to track system metrics (CPU, memory, GPU utilisation) and application-specific metrics (inference latency, throughput). Set up alerts for critical thresholds.

- Logging Solutions: Use centralised logging platforms like the ELK stack (Elasticsearch, Logstash, Kibana) or cloud-native services to collect logs from different components. Implement log aggregation and analysis to troubleshoot issues effectively.

- Data Drift and Model Performance Monitoring: Deploy tools to monitor data distribution changes over time and model performance degradation. This helps in maintaining model accuracy and retraining when necessary.
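One common way to quantify data drift for a single numeric feature is the Population Stability Index (PSI), which compares binned proportions of a reference sample and a current sample. The sketch below is one possible implementation; the equal-width binning, the `1e-6` floor for empty bins, and the function name `psi` are assumptions made for illustration.

```python
import math


def psi(reference, current, bins=10):
    """Population Stability Index between a reference and a current sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when the data is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp outliers to edge bins
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the alerting threshold is best treated as something to tune per feature rather than a fixed constant.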

Augmentation and Fine-Tuning Layer:

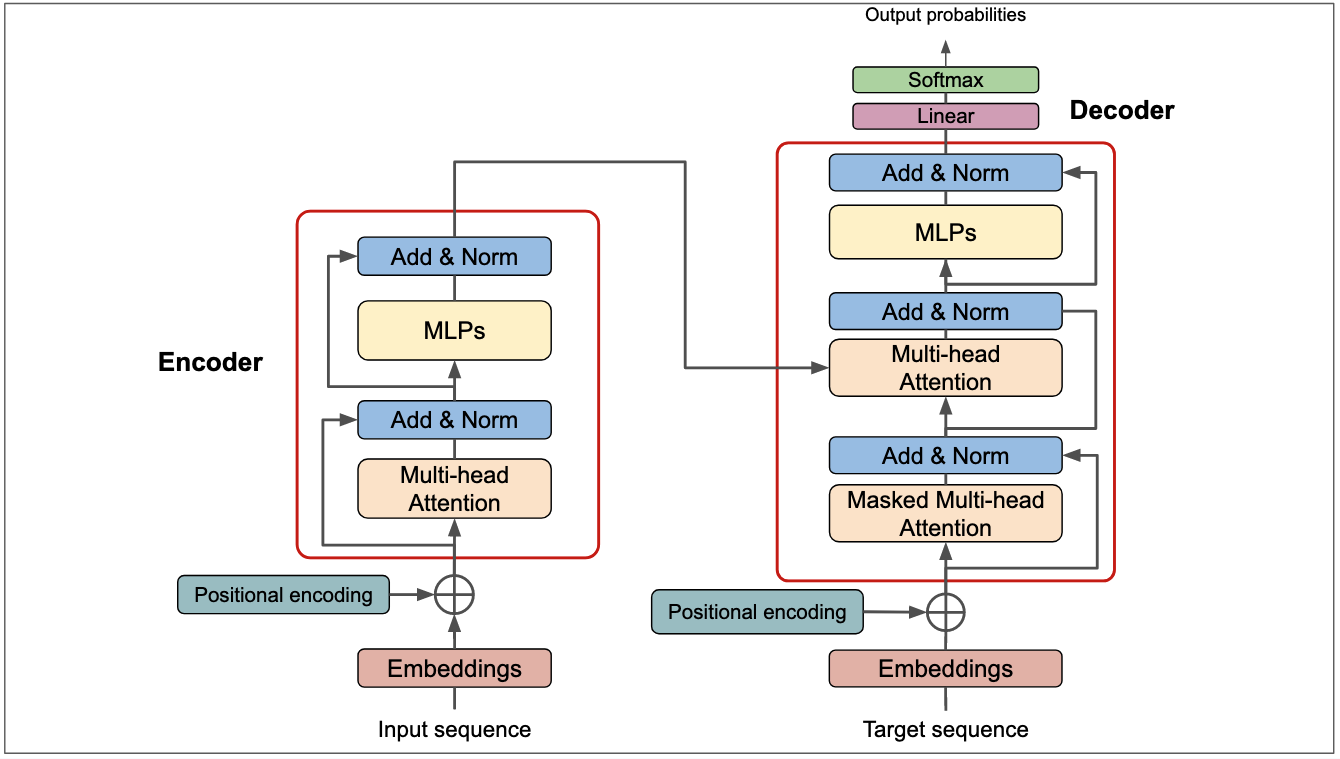

- Fine-Tuning: Utilise frameworks like Hugging Face Transformers or TensorFlow for fine-tuning pre-trained models on domain-specific datasets. Implement hyperparameter optimisation techniques using libraries like Optuna or Ray Tune.

- Embedding and Vector Stores: Use a vector index or vector database (e.g., the Faiss similarity-search library or Milvus) to store embeddings generated from models for similarity search, recommendation systems, or semantic retrieval tasks.

- Feature Stores: Implement a feature store (e.g., Feast) to manage and serve features consistently during training and inference, ensuring data consistency and reducing duplicate work.
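The similarity search that a vector store provides can be illustrated with a brute-force, in-memory stand-in. This is only a sketch of the idea: `TinyVectorStore` is a hypothetical class, and real systems such as Faiss or Milvus rely on specialised index structures rather than scanning every vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """Hypothetical in-memory stand-in for a vector index (brute-force search)."""

    def __init__(self):
        self._items = {}

    def add(self, key, embedding):
        self._items[key] = list(embedding)

    def search(self, query, k=3):
        """Return the k stored keys most similar to the query, best first."""
        scored = [(cosine_similarity(query, vec), key)
                  for key, vec in self._items.items()]
        scored.sort(reverse=True)
        return [(key, score) for score, key in scored[:k]]
```

In practice the embeddings would come from a model rather than being written by hand, and the brute-force scan would be replaced by an approximate nearest-neighbour index once the collection grows.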

Resource Layer:

- Data Ingestion: Build robust data pipelines using tools like Apache Airflow or Prefect to automate data extraction, transformation, and loading (ETL) processes. Support various data sources including databases, APIs, and file systems.

- Data Preparation: Leverage data processing frameworks like pandas, Dask, or Apache Spark to handle large-scale data transformations. Ensure data is cleaned, normalized, and split appropriately for training and validation.

- Model Serving: Deploy models using scalable serving frameworks like TensorFlow Serving, TorchServe, or Kubernetes with serverless functions. Optimise for low latency and high throughput requirements.

- Hardware Acceleration: Utilise specialised hardware (GPUs, TPUs) for training and inference to improve performance. Implement resource scheduling to allocate computational resources efficiently.
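For the data preparation step, a small sketch of a reproducible split followed by normalisation is shown below. The function names, the default 80/20 split, and the fixed seed are assumptions for illustration; at scale the same logic would typically be expressed in pandas, Dask, or Spark.

```python
import random


def train_val_split(rows, val_fraction=0.2, seed=42):
    """Shuffle deterministically, then split into (train, validation) lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # local RNG, global state untouched
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


def fit_min_max(values):
    """Return a scaling function fitted on the given values only."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero for constant columns
    return lambda v: (v - lo) / span
```

Fitting the scaler on the training portion only, and then applying it unchanged to the validation portion, avoids leaking information from validation data into training.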

Architectural Diagram

The diagram illustrates the interaction between the layers and how data and models flow through the system.

Implementation Guidelines

Development Best Practices

- Code Reproducibility: Use environment management tools like Conda or Docker to ensure that code runs consistently across different machines.

- Collaboration: Adopt code review practices and collaborative platforms (e.g., JupyterHub) to facilitate teamwork among data scientists and engineers.

Integration Strategies

- Continuous Training Pipelines: Implement MLOps practices by automating the retraining of models when new data arrives or when performance degrades.

- Automated Testing: Include tests for data validation, model accuracy, and performance benchmarks in the CI pipeline.
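Both ideas in this section, the CI quality gate and the retraining trigger, reduce to explicit threshold checks. The sketch below shows one way to write them; `EvalReport`, `gate_failures`, `should_retrain`, and every default threshold are hypothetical values chosen for illustration, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class EvalReport:
    accuracy: float
    p95_latency_ms: float
    null_fraction: float  # share of missing values in the evaluation data


def gate_failures(report, min_accuracy=0.90, max_latency_ms=200.0,
                  max_null_fraction=0.01):
    """Return the reasons a candidate model should fail the CI gate."""
    failures = []
    if report.null_fraction > max_null_fraction:
        failures.append("data validation: too many missing values")
    if report.accuracy < min_accuracy:
        failures.append("model accuracy below threshold")
    if report.p95_latency_ms > max_latency_ms:
        failures.append("p95 latency above threshold")
    return failures


def should_retrain(baseline_accuracy, live_accuracy, new_rows,
                   tolerance=0.02, min_new_rows=10_000):
    """Trigger retraining on degraded accuracy or enough newly arrived data."""
    degraded = (baseline_accuracy - live_accuracy) > tolerance
    return degraded or new_rows >= min_new_rows
```

A CI job could fail the build whenever `gate_failures` returns a non-empty list, while a scheduled job could call `should_retrain` to decide whether to start a training pipeline.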

Observability Enhancements

- Model Explainability: Integrate tools like SHAP or LIME to provide insights into model predictions, which is crucial for debugging and regulatory compliance.

- Security Monitoring: Implement security practices to monitor for unauthorized access or anomalous activities within the AI platform.

Augmentation Techniques

- Transfer Learning: Leverage pre-trained models to reduce training time and improve performance on specific tasks.

- Experiment Tracking: Use tools like Weights & Biases or MLflow to track experiments, parameters, and results systematically.
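To show what systematic experiment tracking records, here is a deliberately small stand-in that appends each run to a JSON Lines file. It does not use or imitate the Weights & Biases or MLflow APIs; `log_run`, `best_run`, and the record layout are hypothetical.

```python
import json
import time
import uuid
from pathlib import Path


def log_run(log_path, params, metrics):
    """Append one experiment run as a JSON line and return its run ID."""
    record = {
        "run_id": uuid.uuid4().hex,
        "timestamp": time.time(),
        "params": params,
        "metrics": metrics,
    }
    with Path(log_path).open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")
    return record["run_id"]


def best_run(log_path, metric, higher_is_better=True):
    """Return the logged run with the best value for the given metric."""
    with Path(log_path).open(encoding="utf-8") as fh:
        runs = [json.loads(line) for line in fh if line.strip()]
    pick = max if higher_is_better else min
    return pick(runs, key=lambda run: run["metrics"][metric])
```

Dedicated tracking tools add artifact storage, comparison dashboards, and team access on top of this basic idea of recording parameters and results for every run.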

Resource Optimization

- Scalability: Design the infrastructure to scale horizontally or vertically based on workload demands, utilizing cloud services like AWS Auto Scaling or Kubernetes clusters.

- Cost Management: Monitor resource utilization and optimize for cost-efficiency by selecting appropriate instance types and using spot instances where feasible.
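The two points above can be sketched as simple calculations. `desired_replicas` follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler (ceiling of current replicas times the ratio of observed to target metric), leaving out its tolerance and stabilisation behaviour. `cheapest_instance` and the catalogue format are hypothetical, and any prices passed in are the caller's own data.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional horizontal scaling, clamped to a replica range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


def cheapest_instance(catalogue, min_gpus, min_memory_gib):
    """Pick the lowest-priced instance type that satisfies the requirements."""
    candidates = [
        item for item in catalogue
        if item["gpus"] >= min_gpus and item["memory_gib"] >= min_memory_gib
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda item: item["hourly_usd"])
```

For example, four replicas running at 90% utilisation against a 60% target would scale to six. The same catalogue lookup could be extended with a spot-price column where interruption is acceptable.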

Benefits of the Reference Architecture

This reference architecture offers several key benefits:

- Scalability: Accommodates growing data volumes and computational demands through flexible resource allocation and cloud-native principles.

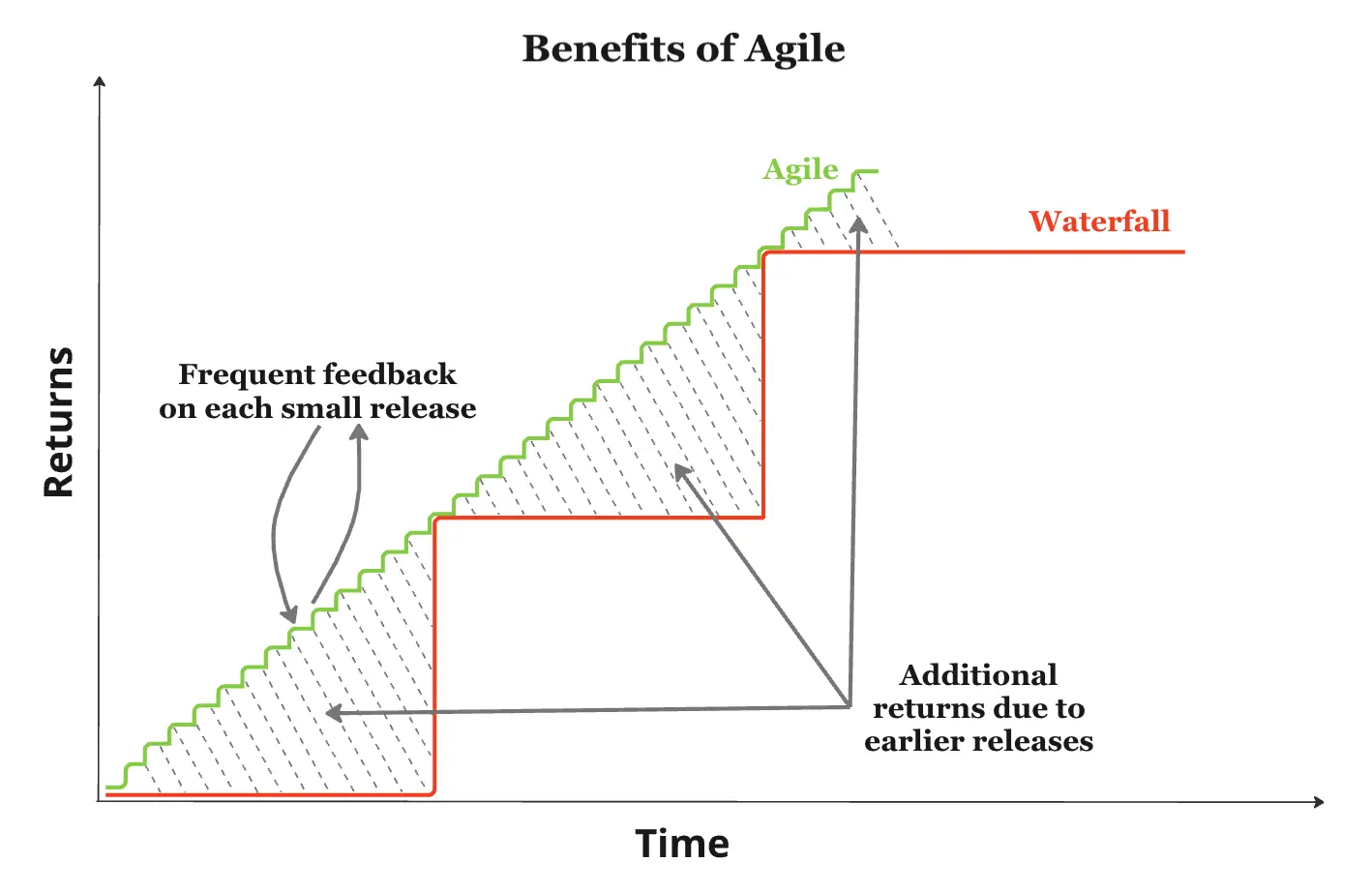

- Agility: Enables rapid deployment and updates of AI models and applications, fostering quick responses to changing business needs.

- Cost Efficiency: Leverages cloud resources and automation to optimize costs associated with infrastructure, storage, and manual effort.

- Enhanced Collaboration: Promotes collaboration between data scientists, developers, and operations teams through shared tools and processes.

- Improved Data Management: Provides robust data management solutions for handling, preparing, and processing large datasets for AI workloads.

- Stronger Compliance and Security: Incorporates security and compliance best practices to protect sensitive data and adhere to regulations.

- Continuous Monitoring and Logging: Ensures system health and stability through comprehensive monitoring and logging capabilities.

- Specialized AI Capabilities: Supports advanced AI functionalities like model fine-tuning, embedding, and adaptation to specific tasks.

- Future-proofing: Provides a flexible and adaptable framework for integrating new AI technologies and methodologies as they emerge.

In summary, with this AI Platform Reference Architecture, AI engineers and data scientists can accelerate the development and deployment of AI models. The architecture addresses key challenges such as scalability, reproducibility, and maintainability, enabling teams to focus on delivering high-quality AI solutions.

Ready to take the next step in your AI platform? Our team of experts is here to help you navigate the complexities of building and evolving AI platforms. We’ll work with you to set up an AI platform tailored to your organization’s needs, ensuring your AI platforms achieve the maturity required for long-term success. Contact us today to unlock the full potential of your AI platforms.